Image-based characterization is critical for understanding and fully utilizing energy resources in petroleum source rocks. Shale formations are particularly challenging due to their highly multiscale nature: features at length scales spanning 10 orders of magnitude can all affect the recovery factor of a shale reservoir. Image-based scale translation methods have the potential to characterize features across these length scales using non-destructive imaging, preserving samples for further experimentation.

Image-based scale translation includes translation between scales (upscaling and downscaling), modalities (image translation), and synthesis. My research has focused largely on three types of image-based scale translation:

- Modality translation: predicting high-resolution microscopy images and image volumes from non-destructive CT images

- Data synthesis: generating synthetic images of source rocks for quantification of petrophysical properties

- Image downscaling (single image super-resolution): predicting a high-resolution image from low-resolution inputs

I have primarily focused on developing deep learning-based methods for each of these tasks, specifically using generative adversarial networks (GANs) and generative flow models.

Modality Translation

Image-based characterization relies on acquiring high-resolution images that are usable for applications such as organic matter segmentation or pore network extraction. An important imaging method for this is focused ion beam-scanning electron microscopy (FIB-SEM), in which a rock sample is milled away slice by slice and an SEM image is acquired at each step. This method creates very high-resolution rock images, but destroys the rock sample in the process. Nanoscale computed tomography (nano-CT), meanwhile, images at the nanoscale while preserving the sample, but its images have much lower resolution and much more noise than FIB-SEM images.

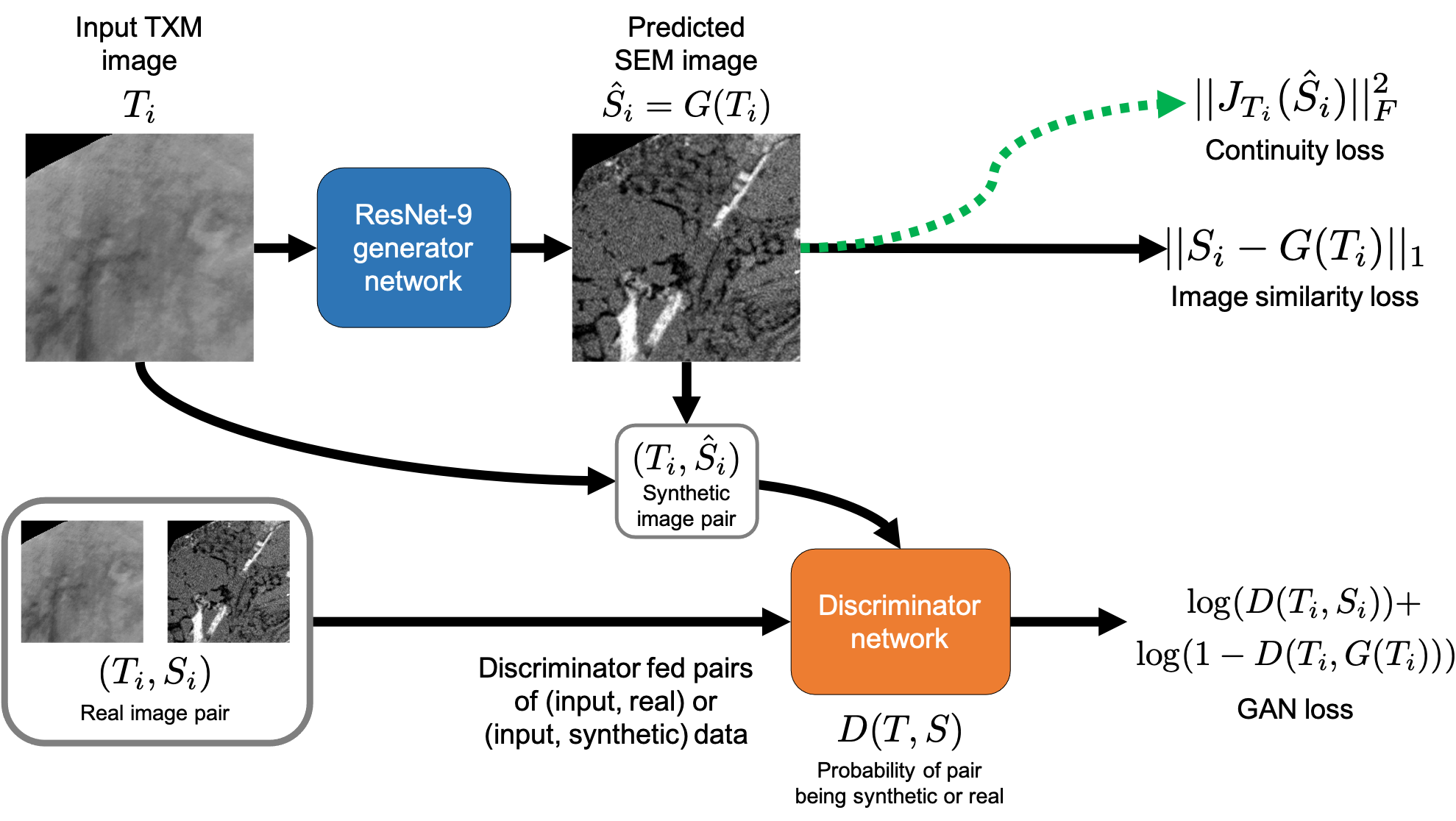

In this project, we want to apply deep learning techniques to predict high-resolution FIB-SEM images from non-destructive nano-CT images. We are focused on two main challenges:

- 2D-to-2D image translation for paired training data

- Translation of 3D nano-CT volumes when only 2D paired training data is available

Addressing these challenges relies on deep learning techniques. Specifically, for the first, we use CNN, pix2pix, SR-CNN, and SR-GAN models, and for the second, we employ a combination of new regularization terms during model training and morphological post-processing:

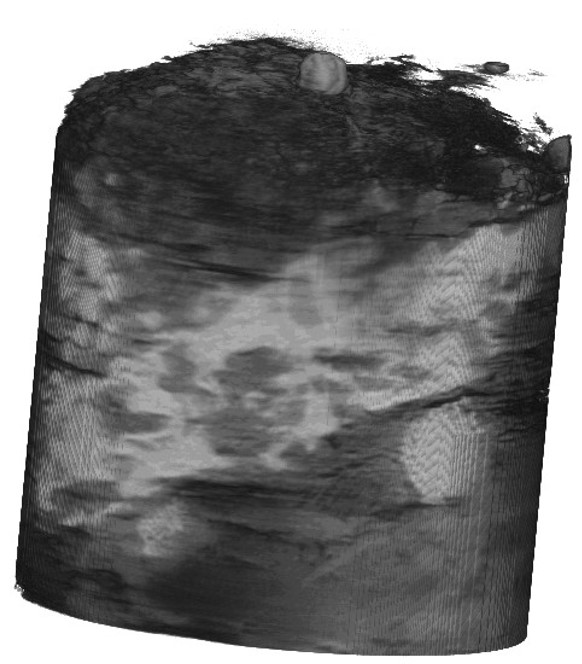
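
For the paired 2D-to-2D setting, a pix2pix-style generator objective combines an adversarial term with a pixel-wise L1 term against the paired target image. The sketch below evaluates such an objective on toy data using only the Python standard library. The function name, the weight `lam=100.0` (a commonly used value), and the flat-list image representation are illustrative assumptions rather than the configuration used in this project, and the new regularization terms for the 3D case are not represented.

```python
import math

def pix2pix_generator_loss(disc_prob_fake, fake, target, lam=100.0):
    """Adversarial term plus weighted L1 term for one paired sample.

    disc_prob_fake: discriminator's probability that the generated image is real
    fake, target:   flattened images with values in [0, 1] (hypothetical layout)
    lam:            weight on the L1 term (assumed value, not from this project)
    """
    eps = 1e-12  # avoids log(0)
    adversarial = -math.log(disc_prob_fake + eps)  # non-saturating generator loss
    l1 = sum(abs(f - t) for f, t in zip(fake, target)) / len(target)
    return adversarial + lam * l1

# Toy paired sample: a "FIB-SEM" target and two candidate predictions.
target = [0.0, 0.5, 1.0, 0.5]
close = [0.1, 0.5, 0.9, 0.5]   # small pixel-wise error
far = [1.0, 0.0, 0.0, 1.0]     # large pixel-wise error

loss_close = pix2pix_generator_loss(0.5, close, target)
loss_far = pix2pix_generator_loss(0.5, far, target)
print(loss_close < loss_far)
```

With the discriminator output held fixed, the prediction closer to the paired target receives the lower loss, which is the role the L1 term plays when paired training data is available.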

Using these techniques, we are able to reconstruct FIB-SEM volumes from input TXM image slices:

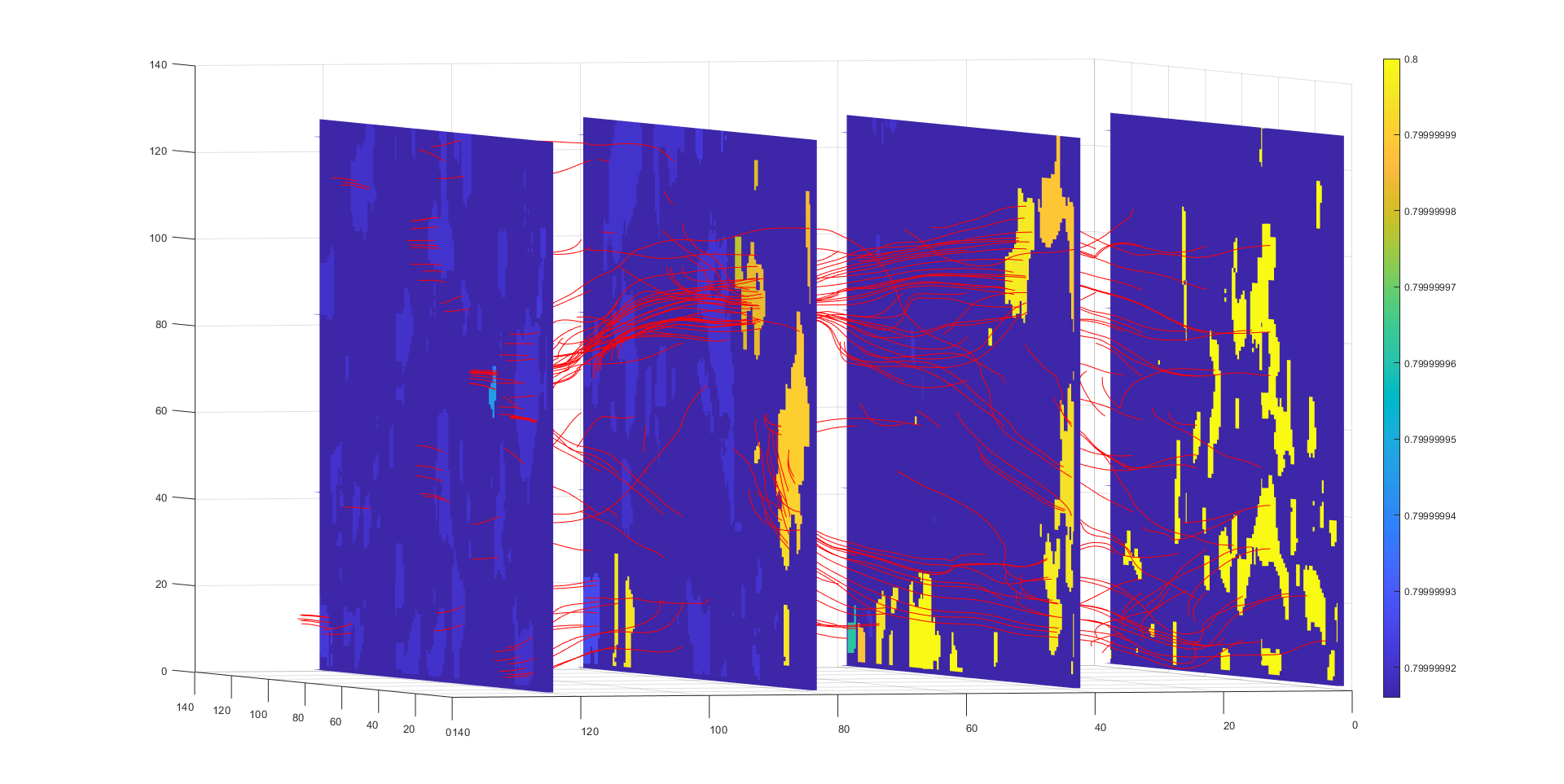

These volumes can then be segmented and used as a simulation domain to compute flow and permeability properties using a Lattice-Boltzmann solver.

Source Rock Image Synthesis

Digital rock physics techniques allow for computation of petrophysical properties directly from image data. However, a persistent challenge in image-based characterization is obtaining enough image data to accurately estimate computed morphological and flow properties and quantify their uncertainty. One possible solution is to train a generative model for the rock images, then compute properties by sampling images from this model. Existing approaches have been successful at synthesizing rock images, but these methods are not generalized for:

- Generating grayscale 3D image volumes from 2D training data

- Generating multimodal image volumes, particularly when only 2D input data is available

These two problems are of particular interest for image-based characterization of shales, where 2D microscopy data is often the only data available, and the grayscale pixel/voxel values carry important information about the composition and properties of the sample.
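
The idea of computing properties by sampling from a generative model can be sketched as a simple Monte Carlo estimate. In the example below, `sample_image` is a hypothetical stand-in for a trained generative model (it just draws random binary pixels), and porosity is chosen only as an example of a morphological property; neither is taken from the project itself.

```python
import random
import statistics

def sample_image(rng, size=32, pore_fraction=0.2):
    """Hypothetical stand-in for a trained generator: binary image, 1 = pore."""
    return [[1 if rng.random() < pore_fraction else 0 for _ in range(size)]
            for _ in range(size)]

def porosity(image):
    """Fraction of pixels labeled as pore (example property, an assumption)."""
    total = sum(len(row) for row in image)
    return sum(sum(row) for row in image) / total

def estimate_property(prop, n_samples=200, seed=0):
    """Monte Carlo estimate of a property over sampled images."""
    rng = random.Random(seed)
    values = [prop(sample_image(rng)) for _ in range(n_samples)]
    mean = statistics.fmean(values)
    std = statistics.stdev(values)       # spread across sampled images
    stderr = std / n_samples ** 0.5      # uncertainty of the mean estimate
    return mean, std, stderr

mean, std, stderr = estimate_property(porosity)
print(f"porosity = {mean:.3f} +/- {stderr:.3f} (sample std {std:.3f})")
```

The sample standard deviation describes variability between synthesized images, while the standard error shrinks as more images are drawn, which is why a generative model helps when real image data is scarce.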

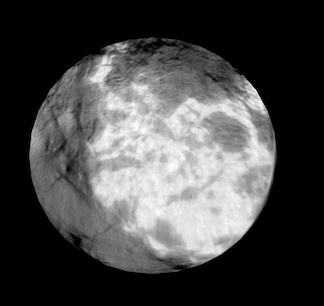

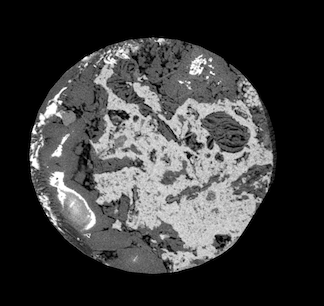

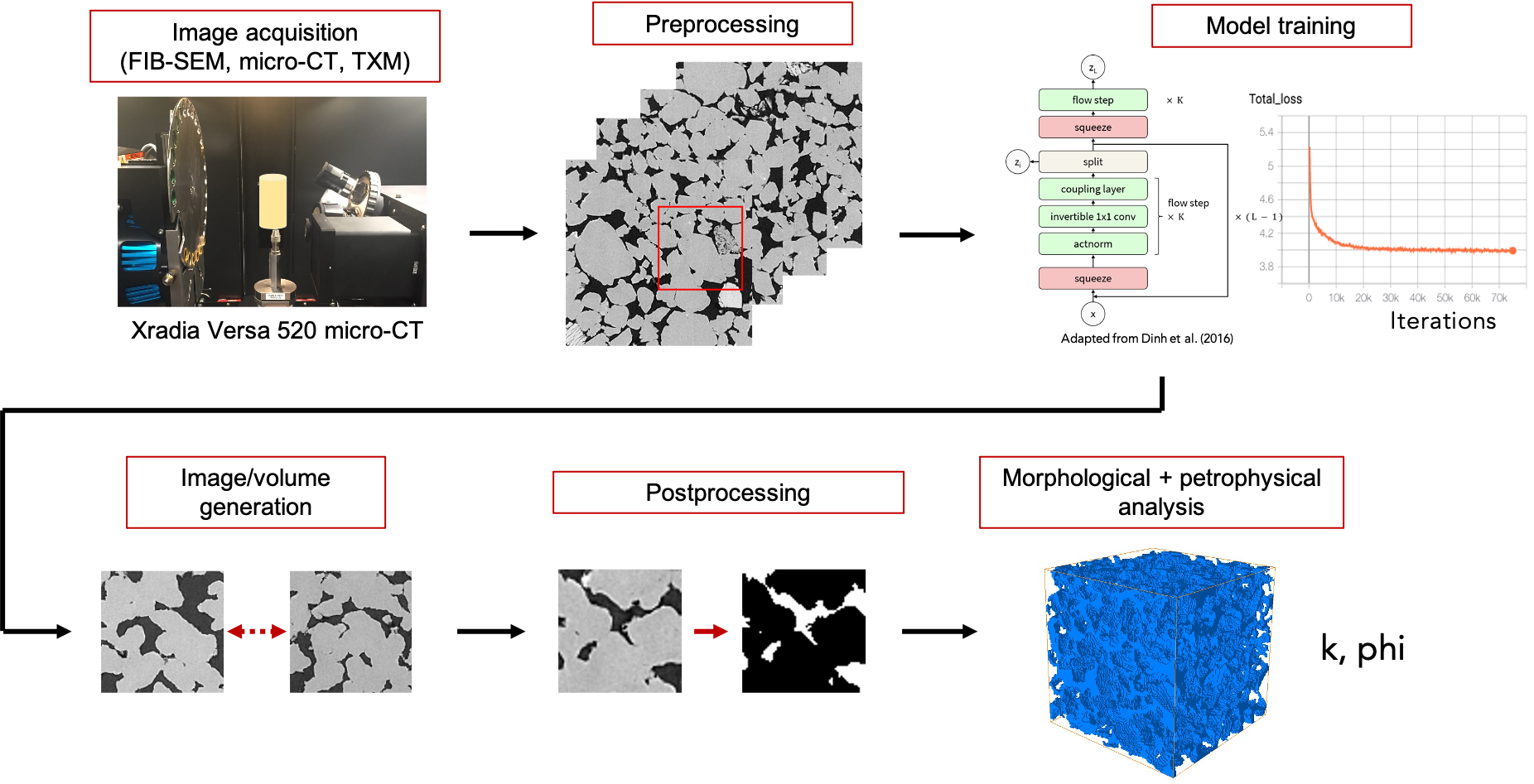

In this line of work we have developed RockFlow, a method that synthesizes grayscale, multimodal, and 3D rock images from only 2D training data by exploiting the latent space interpolation property of generative flow models. The resulting image volumes are realistic, and the generative model can be used in a broader image-based characterization workflow for source rock samples.
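
To illustrate what latent space interpolation means for an invertible model, the sketch below encodes two 2D slices, interpolates linearly between their latent codes, and decodes each intermediate code to build a stack of slices. This is not the RockFlow implementation: the element-wise logit/sigmoid pair is a toy invertible map standing in for a trained generative flow, and all function names are hypothetical.

```python
import math

def encode(image):
    """Toy invertible 'flow': element-wise logit, pixel values in (0, 1)."""
    return [math.log(p / (1.0 - p)) for p in image]

def decode(latent):
    """Exact inverse of encode: element-wise sigmoid."""
    return [1.0 / (1.0 + math.exp(-z)) for z in latent]

def interpolate_slices(slice_a, slice_b, n_between):
    """Stack slice_a, n_between latent-interpolated slices, and slice_b."""
    z_a, z_b = encode(slice_a), encode(slice_b)
    volume = []
    for k in range(n_between + 2):
        t = k / (n_between + 1)  # 0 at slice_a, 1 at slice_b
        z_t = [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]
        volume.append(decode(z_t))
    return volume

# Two flattened grayscale "slices" (toy data).
slice_a = [0.1, 0.8, 0.5, 0.3]
slice_b = [0.9, 0.2, 0.5, 0.7]
volume = interpolate_slices(slice_a, slice_b, n_between=3)
print(len(volume))
```

Because the map is invertible, the two end slices are reproduced exactly, and the intermediate slices follow a path in latent space rather than a straight pixel-wise blend.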

Multimodal images are increasingly important for source rock characterization. Our method is the first to synthesize multimodal source rock images, and the first such model to produce 3D image volumes from only 2D input data.

We hope to apply this method to new combinations of image modalities, and further generalize the approach to accommodate heterogeneous types of data, e.g. NMR response curves with micro-CT images of shales.

Image Downscaling with Single Image Super-Resolution

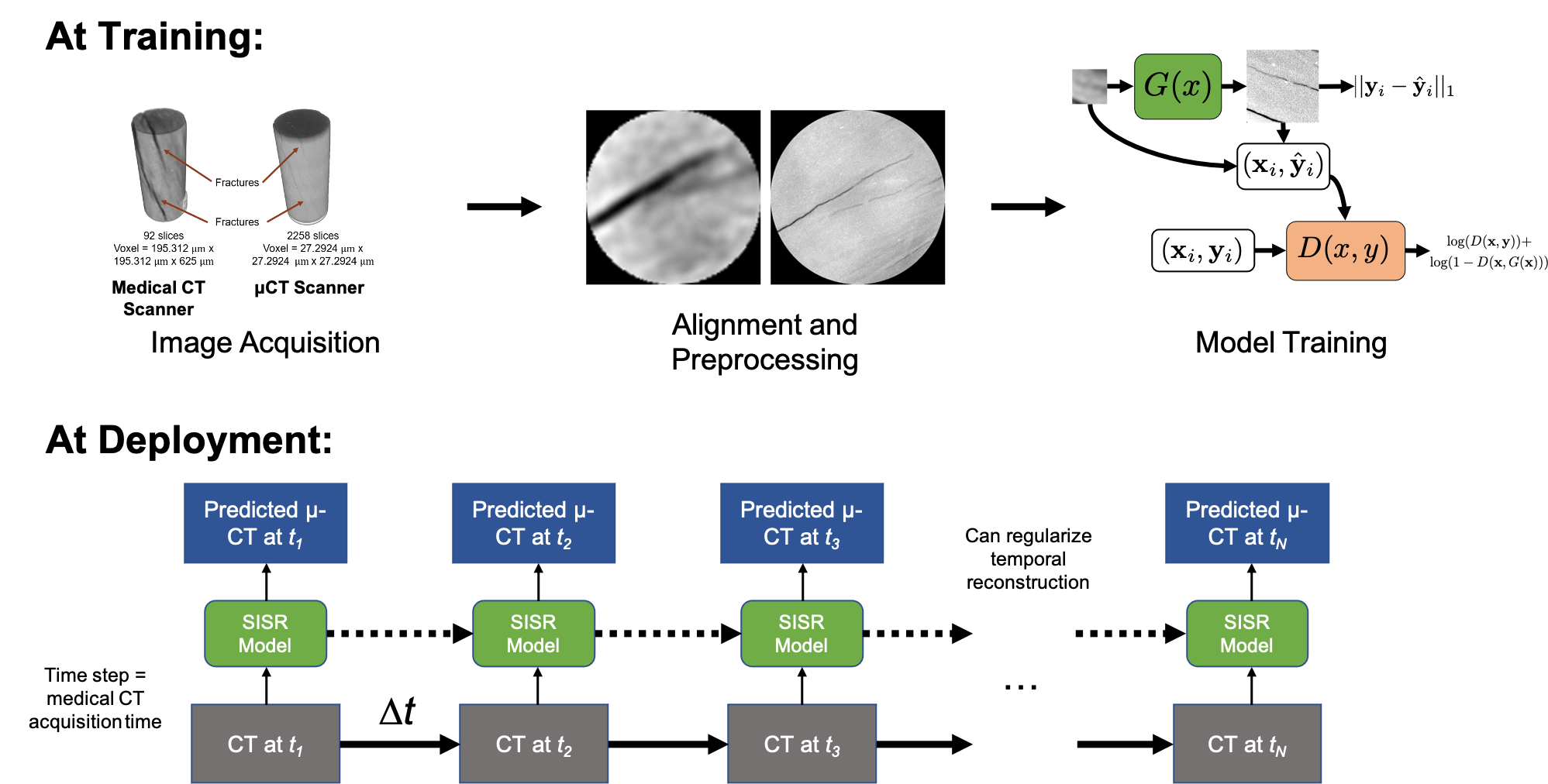

In a supporting capacity, I have also been involved with a project to dynamically image reactive transport processes in shale using single image super-resolution (SISR) models trained with multiscale CT/micro-CT data. Characterizing reactive transport processes in shales requires at least microscale resolution. Micro-CT images, however, take minutes to hours to acquire, which makes micro-CT unsuitable for imaging transport processes that take place on time scales of seconds or minutes. Medical CT images, meanwhile, have an order of magnitude lower resolution than micro-CT but acquisition times that are 1-2 orders of magnitude shorter. Our goal is to train deep learning SISR models to predict high-resolution micro-CT image volumes from low-resolution medical CT scans, then deploy these models to downscale images acquired during a reactive transport experiment.

This project is still in early development, but our hope is that this imaging setup will enable dynamic imaging of reactive transport processes in shale.